
Xplore_AI: A Matter of Trust

Written by: Chris Stephenson

June 2024

It was all going so well last week at my first almost-summer cookout of 2024, until the conversation turned from the latest epidemic (Pickleball!) to what we all do for a living. This was the moment I was forced to admit publicly that I work in AI. The pained expressions on people's faces at this news ranged from pity to horror. Suddenly, what began as an innocent celebration of summer turned into defensive posturing and awkward segues to less upsetting topics like nuclear proliferation and rising sea levels. Of course, no friends were lost, and there were no hard feelings. But the fear and concern behind the comments and light-hearted jokes were palpable. Upon reflection, I began to blame the media's lopsided, sensationalistic AI coverage. After all, it's well documented that people respond more viscerally to negative information than to positive information. Then I heard that AI leaders at SXSW in March got a fairly cold reception and drew numerous audible groans and boos from the typically tech-friendly audience. The mood has shifted over the last year or so. So, what's going on?

Views on AI Highly Mixed

Based on a range of studies of attitudes toward AI, it turns out that overall sentiment does seem to be skewing negative.

Surveys conducted by the Pew Research Center confirm that Americans are growing increasingly cautious about the role of AI in their daily lives. Overall, 52% of Americans say they are more concerned than excited, 36% have mixed feelings, and a paltry 10% say they are more excited than concerned.

Unsurprisingly, Europe has similarly mixed feelings on the topic. According to a study from the United Kingdom's Centre for Data Ethics and Innovation, "While the majority of the public has a neutral view of AI's likely impact on society, levels of pessimism have increased since last year," with the most common concerns centering on job displacement, de-skilling, and the atrophying of human problem-solving skills.

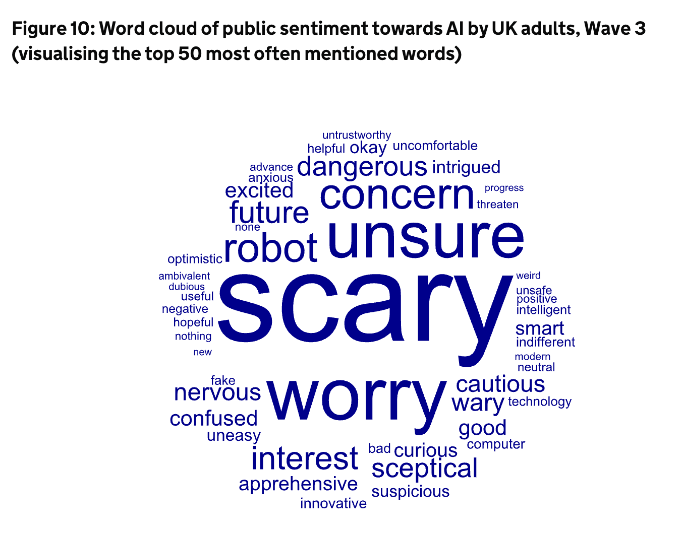

United Kingdom's Centre for Data Ethics and Innovation: word cloud of UK adults' sentiment toward AI, showing the 50 most frequently mentioned words.

Additionally, a Morning Consult survey of a broader set of Europeans indicated that many believe society isn't yet ready for AI, citing concerns about the spread of misinformation, child protection, and personal data privacy.

But here's where things get interesting. While the range and level of concern vary geographically and demographically, some common themes are emerging. Only three in ten Europeans trust generative AI with their personal data. And most tellingly, the top fear in both the UK and Spain is [wait for it]…AI's irresponsible development by tech companies. There it is. Cut through all the hype and hysteria about the technology, and you find a more universal issue at the heart of all this growing negativity: trust.

In fact, a global study from Ipsos found a great deal of distrust in providers of AI, reporting that only about a third of those polled in higher-income countries (e.g., the US, Japan, Canada, and European nations) said they trusted companies that use AI to protect their personal data or to guard against discriminatory outcomes.

Trust is Foundational

“The greatest source of all human security is trust.” This powerful quote from Jeffrey Bleich truly captures the essence of this issue. I believe that if people trusted the organizations that develop, operate, and regulate AI, the general fear and negative sentiment would be greatly reduced.

As a global leader in enterprise data intelligence software, Nuix acknowledges the dual nature of AI: while it holds immense capacity for driving positive change in business and society, without thoughtful guardrails and governance it can also cause unintentional harm. Generative AI is of particular concern because it is prone to producing hallucinations (made-up facts) and biased output, spreading misinformation, and facilitating scams and deepfakes. At Nuix, we view it as not only our professional duty but also our moral imperative to serve as responsible stewards of this potent technology in all its forms.

To earn and maintain the trust of our customers and partners, we have set a high bar for our Responsible AI (RAI) standards. These include, but are not limited to, the following disciplines:

- AI principles that demonstrate explainability, purpose-driven specificity, and end-user control over model creation and validation.

- A comprehensive AI Policy, founded on our core principles and guided by our mission to serve as a global force for good, and which drives a rigorous approach to Responsible AI governance.

- Diverse AI staffing to maximize fair representation and minimize bias at the point of creation.

- Cross-functional collaboration to ensure that we think about AI holistically, as a multidisciplinary capability across the entire organization.

- Accountability at the top, starting with our CEO and executive leadership team, with direct oversight from our General Counsel and global legal team.

Mistrust is not an option

Organizations in 70 countries use Nuix software and solutions to address challenges as diverse as criminal investigations, data privacy, eDiscovery, regulatory compliance, and insider threats. Our customers include the world's top legal and advisory firms, all major financial regulators, the world's largest banks, more than 400 national and regional police forces, and a wide range of major governmental institutions.

These organizations trust us to navigate the chaos and deliver ethical, transparent, and defensible technology. It's a mission we take personally, and we cannot afford to get it wrong. It requires a careful balance of caution, urgency, innovation, and discipline. What's cool is that when you get it right, and I feel like we are, it's a lot of fun. We have some amazing things cooking!

However, until global sentiment changes, if you bump into my parents, don't tell them I work in AI…they still think I'm a bank robber.

Chris Stephenson

Head of AI Strategy & Operations